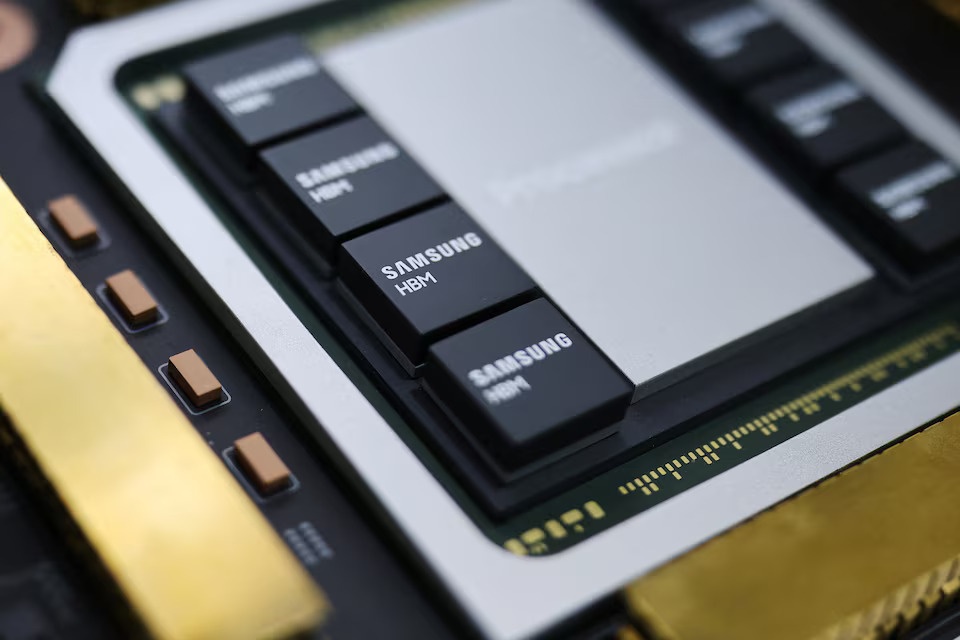

Samsung Electronics has begun shipping HBM4, its latest generation of high bandwidth memory (HBM) chips, signaling a renewed push to strengthen its position in the rapidly expanding artificial intelligence hardware market. The move comes as competition intensifies among semiconductor giants racing to supply the advanced memory required for next-generation AI systems.

With AI workloads growing more complex and data-hungry, high-performance memory has become just as critical as GPUs. Samsung’s HBM4 rollout reflects the company’s effort to close the gap with rivals already dominating AI infrastructure supply chains.

Why HBM4 Matters for AI

HBM4 represents a major step forward in memory architecture. Unlike conventional DRAM modules, high bandwidth memory stacks DRAM dies vertically and links them to the processor over a very wide interface, allowing faster data transfer while improving energy efficiency, both essential for AI training and inference.

For companies building large AI models, memory bottlenecks often limit performance more than processing power. Faster memory enables:

- Larger model training capacity
- Faster real-time AI processing
- Reduced power consumption in data centres
- Improved performance for generative AI applications

As AI systems scale, demand for advanced memory like HBM4 is expected to surge alongside demand for GPUs.
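As a rough illustration of the memory-bottleneck point above, a roofline-style estimate caps attainable throughput at the lower of peak compute and memory bandwidth multiplied by arithmetic intensity (operations performed per byte moved). The sketch below uses hypothetical figures chosen only for illustration; none of them are Samsung or HBM4 specifications, and the function names are made up for this example.

```python
def attainable_tflops(peak_tflops, bandwidth_tbps, flops_per_byte):
    """Roofline estimate: throughput is capped by compute or by memory traffic.

    peak_tflops    -- peak compute of the accelerator, in TFLOP/s (assumed)
    bandwidth_tbps -- memory bandwidth, in TB/s (assumed)
    flops_per_byte -- arithmetic intensity of the workload (assumed)
    """
    # TB/s multiplied by FLOP/byte gives TFLOP/s.
    memory_ceiling = bandwidth_tbps * flops_per_byte
    return min(peak_tflops, memory_ceiling)


def is_memory_bound(peak_tflops, bandwidth_tbps, flops_per_byte):
    """True when the memory ceiling sits below the compute ceiling."""
    return bandwidth_tbps * flops_per_byte < peak_tflops


if __name__ == "__main__":
    PEAK_TFLOPS = 1000.0  # hypothetical accelerator, not a real product

    for bandwidth in (1.0, 2.0):        # hypothetical TB/s values
        for intensity in (50.0, 2000.0):  # low vs. high operations per byte
            tflops = attainable_tflops(PEAK_TFLOPS, bandwidth, intensity)
            bound = "memory" if is_memory_bound(PEAK_TFLOPS, bandwidth, intensity) else "compute"
            print(f"{bandwidth} TB/s, {intensity} FLOP/byte: {tflops} TFLOP/s ({bound}-bound)")
```

Under these assumed numbers, doubling bandwidth doubles throughput for the low-intensity workload and changes nothing for the high-intensity one, which is why faster memory matters most for workloads that move a lot of data per operation.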

Samsung’s Strategy to Regain Momentum

Samsung has long been a dominant player in memory manufacturing, but in recent AI cycles it has faced stiff competition from other chipmakers supplying high-bandwidth memory solutions.

Shipping HBM4 chips signals an attempt to reposition itself at the center of AI hardware supply. Industry observers see this as part of a broader strategy to strengthen relationships with AI infrastructure partners, including cloud providers and advanced chip designers.

Rather than focusing solely on volume, Samsung appears to be prioritizing performance-focused memory tailored for AI accelerators — a market where margins and strategic influence are growing quickly.

The Bigger Battle in the AI Hardware Ecosystem

The AI race is no longer just about software breakthroughs. It is increasingly shaped by companies capable of manufacturing specialized hardware at scale.

Advanced memory, GPUs, networking chips, and energy-efficient infrastructure now form the backbone of AI development. As AI adoption accelerates globally, supply chain positioning is becoming a competitive advantage in itself.

Samsung’s HBM4 shipments highlight how semiconductor companies are shifting from traditional consumer electronics cycles toward long-term AI infrastructure demand.

What This Means for the Industry

If Samsung’s HBM4 chips gain strong adoption, the move could reshape competition across the AI hardware market by diversifying supply beyond existing dominant players.

For AI developers and cloud platforms, increased competition among memory suppliers may help stabilize pricing and reduce dependency on a limited number of vendors. For investors, it reinforces the idea that memory technology — once considered a commodity — is becoming strategic again in the AI era.

Looking Ahead

HBM4 shipments mark an important step, but the real test will be adoption at scale. Performance benchmarks, production yields, and partnerships with AI chip manufacturers will determine whether Samsung can translate this momentum into long-term leadership.

As the AI boom shifts from experimentation to infrastructure build-out, memory technology is quietly becoming one of the most important battlegrounds — and Samsung is making it clear it intends to compete.
