Source: Mike via Adobe Stock

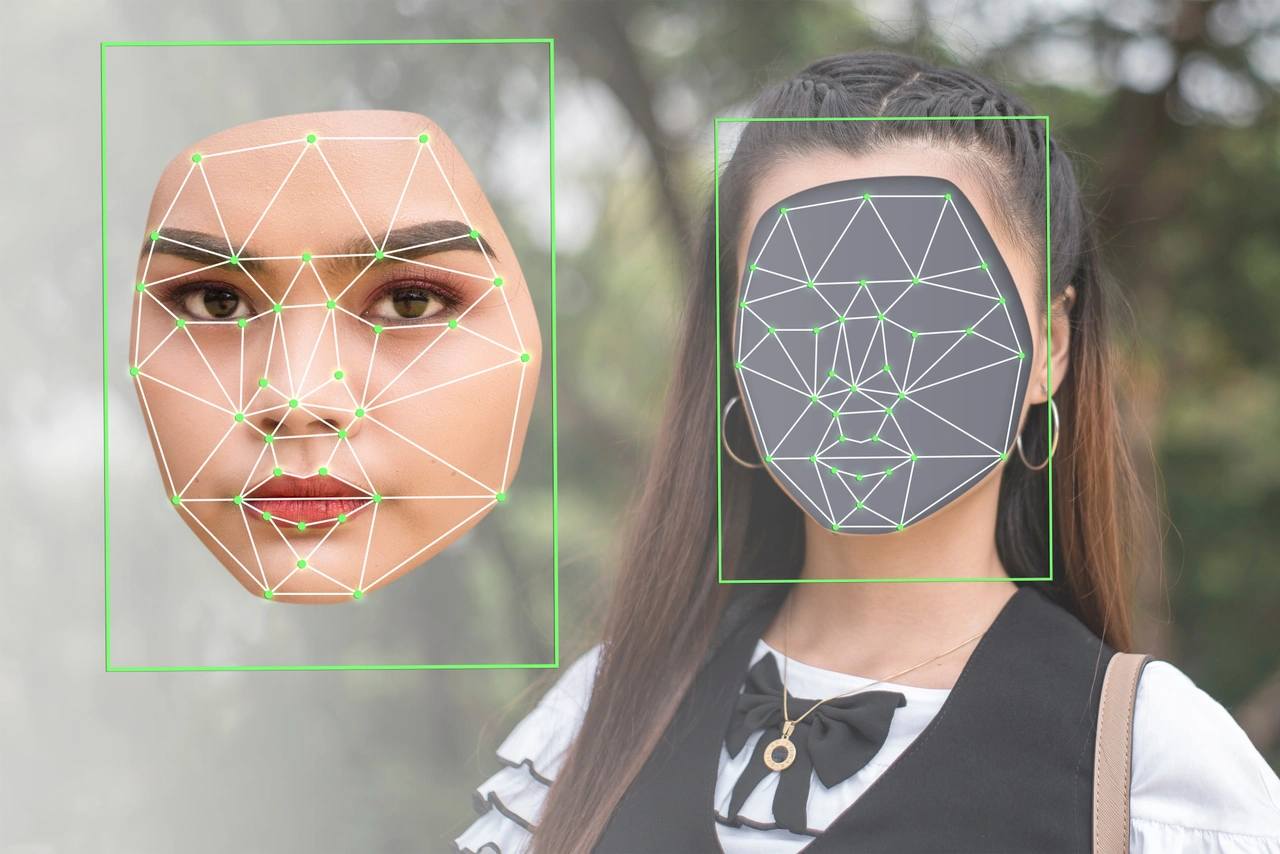

As technology advances, so do the challenges to privacy and personal rights. Among the most disturbing is deepfake pornography: sexually explicit synthetic images, made with generative AI tools, that depict real people without their consent. On September 5, 2024, Microsoft announced an initiative to combat this problem, giving victims a new way to remove such images from its Bing search engine.

Partnering with StopNCII to Combat Revenge Porn

Microsoft’s latest effort is a partnership with StopNCII, an organization that helps victims of non-consensual intimate image abuse. Victims can create a digital fingerprint, or “hash,” of an explicit image, whether real or AI-generated, directly on their own device. Only the hash is shared, and StopNCII’s partners use it to find and remove matching images on their platforms. With this move, Bing joins Facebook, Instagram, Threads, TikTok, Snapchat, Reddit, Pornhub, and OnlyFans, all of which already use StopNCII’s system to curb the spread of revenge porn.
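To make the hash-matching idea concrete, here is a minimal sketch. It is not StopNCII’s actual implementation, which this article does not describe. As a simplifying assumption it uses an exact SHA-256 hash; real image-matching systems generally use perceptual hashes, so that resized or re-compressed copies still match. The function and variable names (`fingerprint`, `report`, `should_remove`, `reported_hashes`) are hypothetical.

```python
import hashlib

def fingerprint(image_bytes: bytes) -> str:
    """Hash the image locally; only this hex string would leave the device.

    Assumption: exact SHA-256 stands in for the perceptual hash a real system would use.
    """
    return hashlib.sha256(image_bytes).hexdigest()

# Hypothetical shared list of reported hashes that participating platforms receive.
reported_hashes: set[str] = set()

def report(image_bytes: bytes) -> str:
    """Victim side: compute the hash on-device and submit only the hash."""
    h = fingerprint(image_bytes)
    reported_hashes.add(h)
    return h

def should_remove(candidate_bytes: bytes) -> bool:
    """Platform side: hash a candidate image and check it against the shared list."""
    return fingerprint(candidate_bytes) in reported_hashes

victim_image = b"...raw bytes of the image..."
report(victim_image)
print(should_remove(victim_image))          # True
print(should_remove(b"a different image"))  # False
```

The key property the sketch illustrates is that the platform never receives the image itself, only a fingerprint it can compare against content it already hosts or indexes.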

Microsoft’s Proactive Measures in Image Removal

In a recent blog post, Microsoft reported the results of a pilot program that ran through August, during which it took action on 268,000 explicit images surfaced in Bing image search by matching them against StopNCII’s database. This goes well beyond its previous approach, which relied on users reporting images directly. Microsoft acknowledged that user reporting alone may not scale enough to adequately address the risk of these images appearing in search results.

Comparing Tech Giants: Microsoft and Google

Microsoft’s move also puts a spotlight on what other tech giants are, or are not, doing, notably Google. Google Search offers its own tools for reporting and removing explicit content, but it has been criticized for not partnering with StopNCII. A recent Wired investigation reported criticism from former employees and victims of Google’s go-it-alone approach. By contrast, Microsoft’s partnership with StopNCII sets a benchmark for industry-wide cooperation.

The Wider Impact of AI-Generated Content

The problem is not limited to adults. So-called “undressing” sites, which generate fake nude images, have targeted even high school students, making this a nationwide concern. Because the U.S. has no federal law addressing AI-generated deepfake pornography, it must rely on a patchwork of state and local legislation. This fragmented approach underscores the need for comprehensive legal frameworks that keep pace with technology and protect people from new forms of digital abuse.

Looking Ahead: The Role of Technology and Law

As Microsoft works to limit the damage caused by deepfake pornography, the discussion naturally widens to privacy, security, and legal accountability online. Increasingly capable AI tools require equally capable safeguards against misuse, and the situation also highlights the need for legislation that provides a unified response to digital content abuse.

Microsoft’s collaboration with StopNCII is a meaningful step forward in the fight against digital exploitation. The hope is that other platforms and lawmakers will follow with more comprehensive, coordinated action to make the internet safer for everyone. Pressure from companies like Microsoft could be the catalyst for broader legal and technological solutions that curb, and ideally eliminate, these invasive practices.
